Comparing two Means

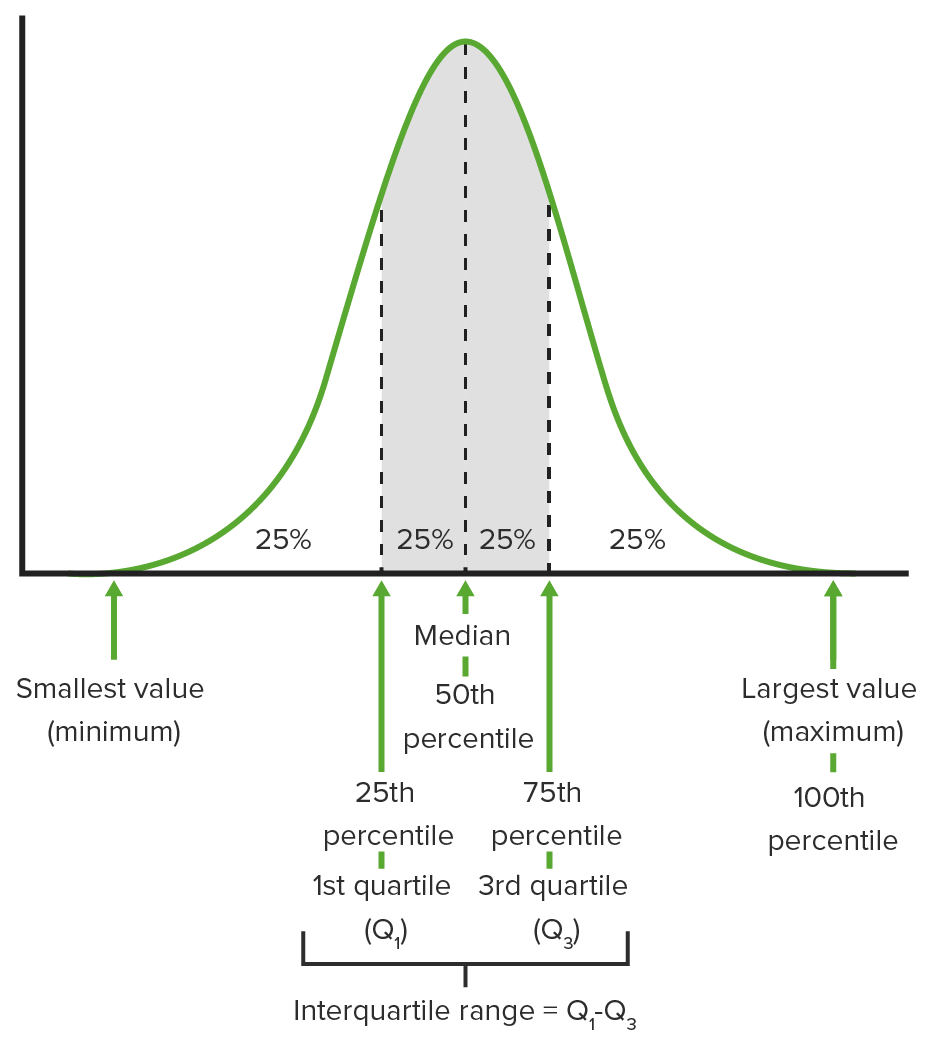

00:01 Welcome back to lecture 7, in which we'll discuss how we compare two means. 00:06 So let's start with an example. 00:08 We'll do one with batteries, and the question is: Should we buy generic batteries, or should we buy name brand batteries? We have a statistics student who designed a study to compare battery life. 00:19 What he wanted to know was whether or not there was a difference between the lifetimes of brand name and generic batteries. 00:25 So he kept a battery-powered CD player continuously playing the same CD with the volume at the same level, and he measured the time until no more music was heard through the headphones. 00:36 So here are the data: we have six lifetimes for each type of battery. 00:42 And now the question is: Is there a difference between the average lifetimes of the two types of batteries? So let's make a plot of the data. 00:50 Here are side-by-side boxplots of the generic battery life and the brand name battery life. 00:56 From the plot, the generic batteries appear to have a higher center. 01:00 So our question is: Is this just random fluctuation? Can this difference be attributed strictly to chance? We're gonna answer this question in the rest of this lecture. 01:11 So how do we do it? Well, we have two means from independent populations. 01:16 So the first thing we need to do is find the standard deviation of the difference of the two means. 01:22 If we let y bar 1 and y bar 2 be the means of independent random samples of sizes n1 and n2 from two independent populations, where these populations have standard deviations sigma1 and sigma2, then we know that the variance of y bar 1 minus y bar 2 is equal to the variance of y bar 1 plus the variance of y bar 2. 01:46 We also know that the variance of y bar 1 is sigma1 squared over n1 and the variance of y bar 2 is sigma2 squared over n2.
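
For reference, here is the variance argument above written in symbols, together with the standard error, the degrees-of-freedom formula and the confidence interval discussed next. The lecture shows the degrees-of-freedom formula only on a slide; the version below is presumably the standard Welch-Satterthwaite approximation, which does reproduce the 8.99 degrees of freedom quoted later for the battery data.

```latex
\begin{aligned}
\operatorname{Var}(\bar{y}_1 - \bar{y}_2) &= \operatorname{Var}(\bar{y}_1) + \operatorname{Var}(\bar{y}_2) = \frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2} \\
\operatorname{SD}(\bar{y}_1 - \bar{y}_2) &= \sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}} \\
\operatorname{SE}(\bar{y}_1 - \bar{y}_2) &= \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}} \\
df &= \frac{\left(\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}\right)^2}{\dfrac{1}{n_1 - 1}\left(\dfrac{s_1^2}{n_1}\right)^2 + \dfrac{1}{n_2 - 1}\left(\dfrac{s_2^2}{n_2}\right)^2} \\
\text{CI:}\quad & (\bar{y}_1 - \bar{y}_2) \pm t^{*}_{df}\,\operatorname{SE}(\bar{y}_1 - \bar{y}_2)
\end{aligned}
```
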
01:57 Therefore, the standard deviation of y bar 1 minus y bar 2, the difference in the sample means, is the square root of sigma1 squared over n1 plus sigma2 squared over n2. 02:09 The problem is, just as it was with proportions, that we don't know the population standard deviations. 02:15 So we have to estimate them using the sample standard deviations, which we'll call s1 and s2. 02:21 We use the standard error of the difference as the measure of variability: 02:25 the standard error of y bar 1 minus y bar 2 is equal to the square root of s1 squared over n1 plus s2 squared over n2. 02:34 Now we're gonna construct the confidence interval for the difference in the population means, mu 1 minus mu 2. 02:40 The confidence interval looks similar to other ones that we have seen. 02:44 We have y bar 1 minus y bar 2, plus or minus some margin of error. 02:49 So what is our margin of error? Well, our margin of error is given by a critical t-value times the standard error of the difference in the sample means. 02:59 Now this t-distribution has to have some number of degrees of freedom, 03:03 and the degrees of freedom are given by this crazy formula that you see here (the Welch-Satterthwaite approximation). 03:08 This formula usually doesn't give a whole number, so once we get the degrees of freedom out of it, we have to round down if we're using a table, so that we can be conservative. 03:19 In order to find the sampling distribution of the difference in the two sample means, if we wanna use the procedures that we're going to outline, we have to have some conditions satisfied, just as we have before. 03:29 First, we have to have independent groups. 03:32 So the data in each group have to be drawn independently. 03:36 Second, randomization. 03:38 The data have to be collected with randomization, so we have to take random samples within each group. 03:45 Third, the 10% condition, which we've seen before.
03:48 The sample size in each group must be less than 10% of the respective population size. 03:55 And fourth, the nearly normal condition. 03:58 Both data sets have to look nearly normal. 04:01 If these conditions are satisfied, then the statistic t, which is y bar 1 minus y bar 2 minus the difference in the population means, all divided by the standard error, has approximately a t-distribution with the number of degrees of freedom given by that crazy formula that we saw a couple of slides ago. 04:18 So let's construct the confidence interval for the difference in means. 04:22 We find the 100 times (1 minus alpha) percent confidence interval for the difference in the population means by taking y bar 1 minus y bar 2, plus or minus the critical value for the t-distribution with the number of degrees of freedom that we found, times the standard error of the difference in the sample means. 04:39 In the batteries example, let's find the 95% confidence interval for the difference between the mean lifetime of the generic batteries and the mean lifetime of the brand name batteries. 04:48 We have the following summaries from our sample. 04:51 The sample mean for the generic batteries is 206.0167 minutes, and the standard deviation in our sample is 10.30193 minutes. 05:02 The mean lifetime in our sample of brand name batteries is 187.4333 minutes, with standard deviation 14.61077 minutes. 05:14 From these and the fact that the sample sizes are 6 in each group, we can find the degrees of freedom. 05:20 Using that formula, we get 8.99 degrees of freedom, which we just round down to 8. 05:27 Then the 95% confidence interval is given by y bar 1 minus y bar 2, plus or minus the critical t-value for 8 degrees of freedom times the standard error. 05:37 So we get 206.0167 minus 187.4333, plus or minus 2.306 times the standard error of 7.2985, which gives us an interval from 1.7531 up to 35.4137. 05:54 So what does that tell us?
Well, what that tells us is that we're 95% confident that the generic battery lasts, on average, between 1.7531 and 35.4137 minutes longer than the brand name battery. 06:09 We didn't check the conditions beforehand, so we should do that now. 06:13 First, we have independent groups. 06:16 It is reasonable to assume that the generic and the brand name batteries are independent, 06:19 so we have that condition satisfied. 06:23 The batteries were chosen randomly in each group, so the randomization condition is satisfied. 06:28 For the 10% condition, each sample is far less than 10% of the population of batteries of each type, 06:34 so we're good there. 06:36 And for the nearly normal condition, both histograms are unimodal and somewhat symmetric, 06:40 so we're okay there too. 06:44 Now let's perform a hypothesis test. 06:47 Let's use the battery data to test, at the 5% significance level, whether there is a difference between the mean lifetimes of the two types of batteries. 06:55 The steps are the same as before, and we know the sampling distribution, so now we can construct the test. 07:01 Our null hypothesis is that there is no difference between the mean lifetimes of the batteries, 07:07 so we write mu G minus mu B equals 0. 07:10 Since we're just testing for a difference, we want a two-tailed test. 07:14 So our alternative hypothesis is mu G minus mu B is not equal to 0. 07:20 We already checked the conditions after we formed the confidence interval. 07:25 The conditions are the same for the hypothesis test, so we don't need to do any more work here. 07:30 Now we can compute the test statistic. 07:32 Remember that under the null hypothesis, the statistic t, which is y bar G minus y bar B minus 0, divided by the standard error of the difference in the sample means, has approximately a t-distribution with 8 degrees of freedom.
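
The confidence interval and the test statistic for the battery example can be reproduced from the summary statistics alone. The sketch below is a minimal standard-library version; the helper name `welch_two_sample` is hypothetical, and the critical value 2.306 is hard-coded as read from a t-table for 8 degrees of freedom rather than computed (with SciPy installed, `scipy.stats.t.ppf(0.975, 8)` would give it).

```python
import math


def welch_two_sample(mean1, sd1, n1, mean2, sd2, n2):
    """Unpooled two-sample t quantities computed from summary statistics."""
    v1 = sd1 ** 2 / n1  # estimated variance of y-bar 1: s1^2 / n1
    v2 = sd2 ** 2 / n2  # estimated variance of y-bar 2: s2^2 / n2
    se = math.sqrt(v1 + v2)  # standard error of y-bar 1 - y-bar 2
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    diff = mean1 - mean2
    t_stat = diff / se  # test statistic under H0: mu1 - mu2 = 0
    return diff, se, df, t_stat


# Battery summaries from the lecture: generic (G) first, brand name (B) second
diff, se, df, t_stat = welch_two_sample(206.0167, 10.30193, 6, 187.4333, 14.61077, 6)
df_table = math.floor(df)  # round down for a conservative table lookup
T_CRIT_8 = 2.306  # two-sided 5% critical value for 8 df, as read from a t-table

margin = T_CRIT_8 * se
low, high = diff - margin, diff + margin  # 95% confidence interval for mu_G - mu_B
reject_h0 = abs(t_stat) > T_CRIT_8  # two-tailed test at the 5% level

print(f"SE = {se:.4f}, df = {df:.2f} (table df = {df_table})")
print(f"95% CI for mu_G - mu_B: ({low:.4f}, {high:.4f})")
print(f"t = {t_stat:.3f}, reject H0: {reject_h0}")
```

Because the lecture rounds the standard error to 7.2985 before multiplying, the last digit of the interval endpoints printed here may differ slightly from the values quoted in the transcript.
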
07:46 So we compute the t-statistic, and we reject the null hypothesis if t is less than -2.306 or greater than 2.306. We can find these values in the table. 07:58 What we find is that our test statistic has a value of 2.546. 08:03 Since 2.546 is greater than 2.306, we reject the null hypothesis at the 5% significance level and conclude that there is a difference between the mean lifetimes of the generic batteries and the brand name batteries. 08:18 Sometimes we get lucky and we don't have to use that crazy formula for the degrees of freedom. 08:24 If it can be reasonably assumed that the variances, or equivalently the standard deviations, in the two populations are equal, then we can make things easier on ourselves by pooling the sample variances. 08:35 The pooled t-test comes with an extra condition, 08:38 and that condition is the similar spreads condition. 08:41 What that means is that if the side-by-side boxplots reveal that the groups have similar spreads, then we can use the pooled t-test. 08:48 This relies on the pooled standard error. 08:51 How do we find that? First we need to find the pooled variance, which we'll call s squared pooled. Again, if we let n1 be the sample size in the first group and n2 be the sample size in the second group, then the pooled sample variance is (n1 minus 1) times s1 squared plus (n2 minus 1) times s2 squared, all divided by n1 plus n2 minus 2. 09:15 Then the pooled standard error of y bar 1 minus y bar 2 is equal to the square root of the pooled variance times (1 over n1 plus 1 over n2). 09:28 In this situation, the test statistic has a t-distribution with n1 plus n2 minus 2 degrees of freedom. 09:35 So the degrees of freedom here are much easier to calculate when we have equal spreads than they were in the case where we can't assume that. 09:43 So let's do an example of the pooled t-test.
09:46 We're gonna apply it to the battery data, even though, when we look at the boxplots, they don't really seem to show similar spreads. 09:53 We're gonna do it anyway, just to get a feel for the mechanics of the test. 09:57 Our hypotheses are the same as they were before: mu G minus mu B equals 0 is the null hypothesis, 10:04 and the alternative is mu G minus mu B not equal to 0. 10:08 Now we need to check the conditions. 10:10 We've already done that, except for the similar spreads condition. 10:14 The similar spreads condition doesn't appear to be satisfied, but we're gonna try out the pooled t-test on the battery data just so that we can get a feel for how to do it. 10:22 So now we need to find our test statistic, 10:24 and this involves finding the standard error of the difference between y bar G and y bar B. To find the pooled variance, we use the formula that we saw on the previous slide, 10:35 and we get a pooled variance of 159.8022. 10:39 To get the pooled standard error, we take the square root of the pooled variance times (1 over n1 plus 1 over n2), and that gives us a pooled standard error of 7.2985. Because the two sample sizes are equal, this is exactly the same as the unpooled standard error we used before. We continue the mechanics: we know the degrees of freedom are n G plus n B minus 2, which is 10. 10:58 So the test statistic is y bar G minus y bar B divided by the pooled standard error, which gives us 2.546. Looking at the table, we find that we reject H0 if our test statistic takes a value less than -2.228 or greater than 2.228. Our test statistic took a value of 2.546. 11:24 So this tells us that we need to reject H0 and conclude that there is a difference in the average battery lifetimes between the generic and the brand name batteries. 11:33 This is the same conclusion that we got before. 11:36 We got pretty lucky here, since we shouldn't have used the pooled t-test in the first place. 11:41 So in two-sample inference, what can go wrong? Well, here are some pitfalls to avoid.
11:46 First of all, we need to watch out for groups that are not independent. 11:50 If the groups aren't independent, then we can't use the procedures that we outlined in this lecture. 11:55 We need to look at the plots to check conditions. 11:59 We need to be careful if we apply inference methods to data where no randomization was used. 12:04 And finally, we don't want to interpret a significant difference between means or proportions as evidence of cause. 12:10 So what have we done in this lecture? Well, we talked about how to carry out a test and form a confidence interval for a difference in population means. 12:18 We did it for the situation where the spreads aren't assumed to be equal, and then we looked at the special case where the spreads can be assumed to be equal and carried out a pooled t-test. 12:27 We closed with a discussion of the things that can go wrong and the things we want to avoid in two-sample inference. 12:33 This is the end of lecture 7, and I look forward to seeing you again for lecture 8.
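
The pooled t-test on the battery data can be sketched the same way. This is a minimal standard-library version with a hypothetical helper name, `pooled_two_sample`; it assumes equal population variances (the similar spreads condition), and the critical value 2.228 for 10 degrees of freedom is hard-coded as read from a t-table.

```python
import math


def pooled_two_sample(mean1, sd1, n1, mean2, sd2, n2):
    """Pooled two-sample t quantities; assumes equal population variances."""
    df = n1 + n2 - 2
    # Pooled variance: sample variances weighted by their degrees of freedom
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))  # pooled standard error
    t_stat = (mean1 - mean2) / se  # test statistic under H0: mu1 - mu2 = 0
    return sp2, se, df, t_stat


# Battery summaries from the lecture (generic first, brand name second)
sp2, se_pooled, df_pooled, t_pooled = pooled_two_sample(
    206.0167, 10.30193, 6, 187.4333, 14.61077, 6
)
T_CRIT_10 = 2.228  # two-sided 5% critical value for 10 df, as read from a t-table
reject_h0 = abs(t_pooled) > T_CRIT_10  # two-tailed test at the 5% level

print(f"pooled variance = {sp2:.4f}, pooled SE = {se_pooled:.4f}, df = {df_pooled}")
print(f"t = {t_pooled:.3f}, reject H0: {reject_h0}")
```

With equal sample sizes n, the pooled variance is (s1² + s2²)/2, so s_p²(1/n + 1/n) = s1²/n + s2²/n: the pooled standard error (about 7.2985) equals the unpooled one, and t is again about 2.546. Here the two tests differ only in their degrees of freedom, and therefore in the critical value.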

About the Lecture

The lecture "Comparing two Means" by David Spade, PhD, is from the course "Statistics Part 2". It contains the following chapters:

- Comparing Two Means

- The Sampling Distribution

- Check the Conditions

- The Pooled t-Test

Included Quiz Questions

What should be performed before carrying out any inference about the difference between two means?

- The data in each group should be plotted using side-by-side box plots in order to look at the differences in the two distributions.

- The data should be plotted in one boxplot in order to look at the differences in the two distributions.

- Nothing needs to be done before using the two-sample t-procedures.

- The data should be grouped together and examined in one histogram in order to look at the differences between the two groups.

- The sample should undergo subgroup analysis to match age, gender, and other important characteristics.

Which of the following is not a necessary condition for a two-sample t-interval?

- Neither data set must look roughly normal.

- The data in each group are drawn independently.

- The data are collected in a suitably random fashion.

- The sample sizes are each less than 10% of the respective population sizes.

- Both data sets must look roughly normal.

When is the pooled t-test appropriate?

- The pooled t-test is appropriate when the spreads in each group are roughly the same and all other conditions for the two-sample t-procedures are satisfied.

- The pooled t-test is appropriate when both groups are independent.

- The pooled t-test is appropriate in any situation in which we are comparing two population means.

- The pooled t-test is appropriate when no distribution appears normal.

- The pooled t-test is appropriate when the spreads differ by a significant amount and all other conditions for the two-sample t-procedures are satisfied.

What is true regarding the differences between the two-sample t-procedures and the pooled t-procedures?

- Once the test statistic is calculated along with the degrees of freedom for the test statistic, the computation of the critical value is done in the same way for each procedure.

- The standard error calculations are the same for each procedure.

- The degrees of freedom are the same for the two procedures.

- The test statistic is calculated in the same way for each procedure, including the standard error calculation.

- The pooled standard error is necessary for both tests.

What is true of two-sample inference?

- A significant difference in means or proportions is not necessarily evidence of cause.

- Looking at plots to check conditions before performing inference will cause problems with the inference procedure.

- Applying the two-sample t-procedures is fine to do even if the data are not suitably randomized.

- It is not appropriate to apply the t-procedures if the groups are not independent.

- Two-sample inference provides a precise value that negates the need for a confidence interval.

For which hypothesis are we likely to use a two-tailed test?

- H1: µ1 - µ2 ≠ 15

- H1: µ1 - µ2 > 15

- H1: µ1 - µ2 < 15

- H1: µ1 - µ2 ≤ 15

- H1: µ1 - µ2 ε 15

A z-distribution is used when the population standard deviation is known and the sample size is greater than what value?

- 30

- 10

- 20

- 40

- 50
