ChatGPT, as described by itself, “is an AI-powered entity designed to process and generate human-like text based on vast amounts of data and pre-existing knowledge.” ChatGPT and models like it can produce large amounts of text and information in seconds, but there are currently limitations in what they can produce (1). Additionally, many educators are worried about how to stop students from using ChatGPT. Rather than devising ways to stop students from using it, this article will suggest how healthcare educators and students can use ChatGPT as a learning tool while considering some of its current limitations.

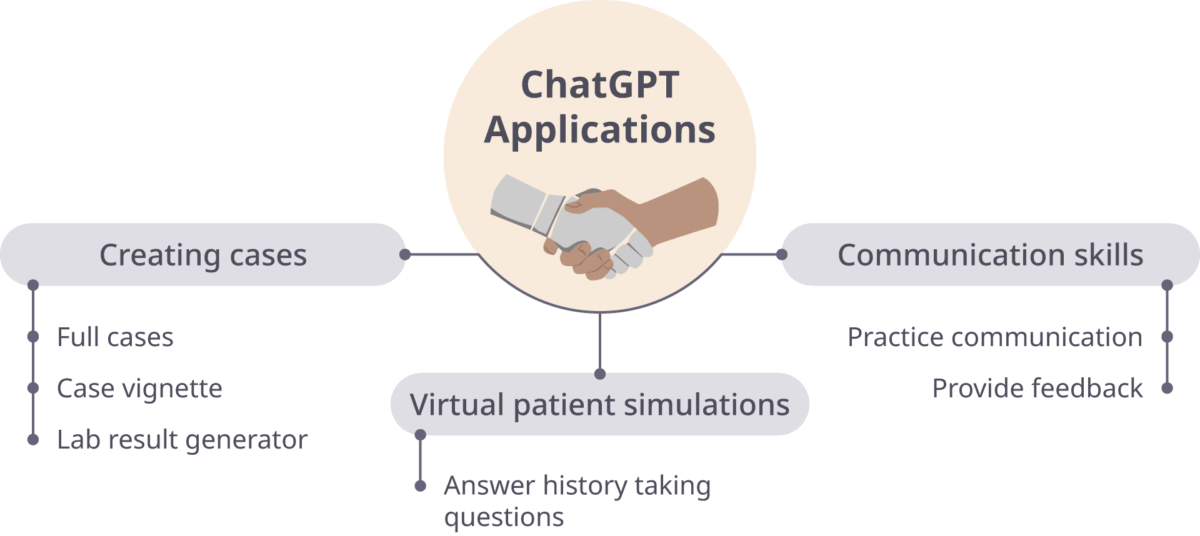

Aside from answering basic questions, ChatGPT can act as a virtual patient simulator, be used for communication practice, create cases and vignettes, and produce lab results. Even in the early stages of development, these capabilities promise to be a great time saver and tool for both students and educators. While a number of educational and clinical applications are being explored (2), there is still much we don’t know. For now, here are a few ways to use ChatGPT that support your existing evidence-based teaching practices and promote effective student engagement.

Communication Skills

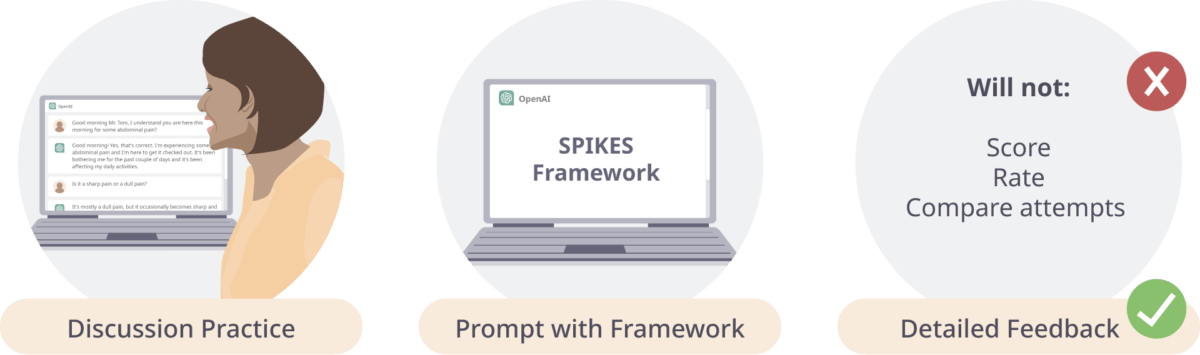

What it can do: Because it is a language prediction model, students can have a discussion with ChatGPT. ChatGPT will roleplay (to an extent; discussed below), which means students can practice communication skills as if talking to a patient. This can be especially useful in pre-clinical and early clinical practice. If given a framework, such as SPIKES, ChatGPT will also give detailed feedback following the discussion, telling students where they met the conditions, where conditions were missing, and why those conditions are important. For example, “It’s unclear whether you provided Mr. Smith with an opportunity to ask questions or express his concerns about the diagnosis and his prognosis. This step is crucial to engage the patient in the decision-making process and ensure that their needs and preferences are taken into account.”

What it can’t do: ChatGPT will give detailed feedback, but it will not score, rate, or compare attempts. More importantly, ChatGPT does not have emotion, a key component of interacting with patients. Even when bad news is delivered poorly, ChatGPT is polite and optimistic. ChatGPT also anticipates what a physician or nurse wants to hear: it gives more information and more accurate terminology than a patient normally would, and it asks detailed follow-up questions. In this respect, ChatGPT would likely only be useful for early communication practice where the skills are more procedural, such as learning a framework or preparing, at the start of a new rotation, for the follow-up questions patients might ask.

Sample prompts:

- “I will play the role of a doctor and you will play the role of a 20-year-old patient with mild chest pain so I can take your history.”

- “I will play the role of a doctor giving bad news. You will play the role of a 50-year-old patient.”

- After practicing communication with a patient, “Give me feedback on my conversation with the patient based on the SPIKES framework.”

Virtual Patient Simulations

What it can do: ChatGPT says it can act as a simulated patient; however, actual results were disappointing. It often produced the entire dialogue between patient and doctor, even after attempts to train it. When prompted with “Can you be a virtual patient?” or “Can I ask you questions as if you’re a patient?”, however, ChatGPT does quite well. Learners can give it parameters, such as “a 30-year-old with abdominal pain,” and ChatGPT will answer questions as if it were the patient, produce lab results, and give potential diagnoses. This can be useful for learning to take a history, generating possible diagnoses, and narrowing down a potential diagnosis. A student can even ask if a next step or diagnosis is reasonable given the information, although instructors should be aware that ChatGPT is careful not to give medical advice, so students may need to “remind” ChatGPT that it is a practice case.

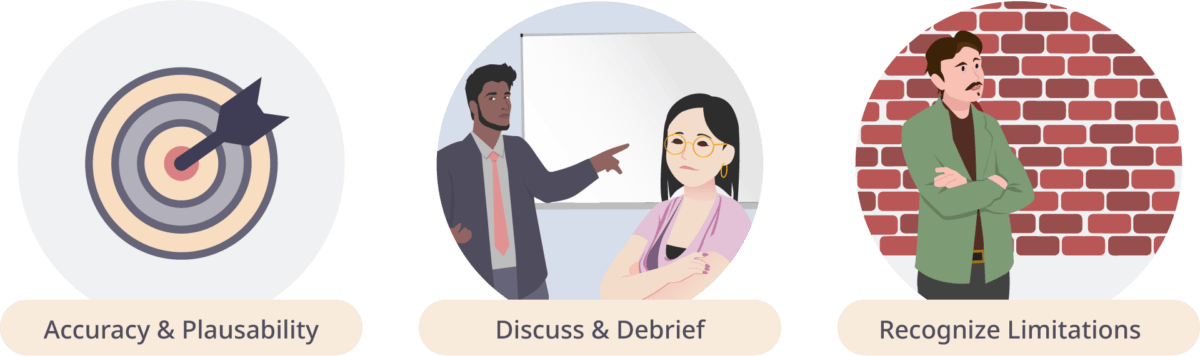

What it can’t do: As mentioned before, ChatGPT will not display emotion as a patient might. It also will not verify the accuracy of medical information given or guessed. A student could give the wrong information or diagnoses and not realize it unless an instructor provides follow-up or feedback. ChatGPT might also give inaccurate diagnoses, since it generates content from common or popular information in addition to scientific literature (3). If asked to assess any responses given by the student, ChatGPT’s response may be incomplete (1). Any use of ChatGPT as a virtual patient should always be followed up by a debrief or discussion to clarify any misconceptions.

It may also be difficult to target learning objectives with these exercises. The desired outcome would have to be told to ChatGPT at the start of the exercise, which would reveal the result to the student before they start. ChatGPT can be given broad parameters with many outcomes, but this limits the ability of the educator to ensure learning objectives can be met. One work-around for this limitation is to give ChatGPT a range of options, then have the student ask questions and order labs to determine the right outcome. While giving a range does ensure that learning objectives are met, it limits the options students have to explore. Another limitation is that the student may simply ask ChatGPT for the potential diagnoses, next step, or other information you have tasked them to find. For this reason, it may be best to use these exercises as formative evaluations or discussion starting points.

Sample prompts:

- “ChatGPT, play the role of a patient that is a 30-year-old male with a cough that might be chronic bronchitis, reflux, asthma, or lung cancer.”

- “What would be reasonable diagnostic tests to use in diagnosing this patient?”

- “Would _________ be a reasonable diagnostic test in this case?”

- “Would _________ be a reasonable next step in treating this patient?”

- If ChatGPT refuses to give medical advice, restate the same question starting with “Since this is a practice exercise…”

- “Create a sample OSCE problem.”
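Educators who reuse these prompts across many cases may find it convenient to keep them as a template. The Python sketch below is only an illustration of that idea: the helper names `join_options`, `build_patient_prompt`, and `as_practice_exercise` are hypothetical, and the code only assembles text to paste into ChatGPT. It does not call ChatGPT or any API.

```python
def join_options(options):
    """Join diagnoses into a phrase such as 'a, b, or c'."""
    if not options:
        raise ValueError("give at least one possible diagnosis")
    if len(options) == 1:
        return options[0]
    if len(options) == 2:
        return f"{options[0]} or {options[1]}"
    return ", ".join(options[:-1]) + f", or {options[-1]}"


def build_patient_prompt(age, sex, complaint, differentials):
    """Assemble a virtual-patient prompt (hypothetical helper, text only)."""
    return (
        f"ChatGPT, play the role of a patient who is a {age}-year-old {sex} "
        f"with {complaint} that might be {join_options(differentials)}."
    )


def as_practice_exercise(question):
    """Restate a question if ChatGPT declines to give medical advice."""
    return f"Since this is a practice exercise, {question}"


# Rebuild the first sample prompt from its parameters.
prompt = build_patient_prompt(
    30, "male", "a cough",
    ["chronic bronchitis", "reflux", "asthma", "lung cancer"],
)
print(prompt)
print(as_practice_exercise(
    "what would be reasonable diagnostic tests to use in diagnosing this patient?"
))
```

Keeping the range of possible diagnoses as a list makes it easy to widen or narrow the options for a given learning objective, with the trade-offs described above.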

Creating Cases

What it can do: ChatGPT can create full cases, vignettes, and lab results instantly and to match almost any desired parameters. For a full case, it will give demographics, chief complaint, physical exam results, diagnostics, and possible diagnoses. For a case vignette, it will give complaint, history, vitals, and physical examination. This capability can be a major time saver for educators over creating or finding existing cases.

What it can’t do: ChatGPT may not retrieve accurate information. Cases will have to be checked thoroughly for inaccuracies. Faculty should be aware that students can put in the same parameters and get the same or similar results, so these may be more useful for discussions, in-class exams, or as part of a larger project.

Sample prompts:

- “Generate a case in which it could be difficult to distinguish between pneumonia and lung cancer.”

- “Create a case vignette for an adult with difficulty breathing.”

Caution When Using ChatGPT

ChatGPT can be a valuable tool, but caution should be taken when using it, especially in healthcare and healthcare education. When faced with missing or ambiguous information, ChatGPT may create fictional responses, sometimes called “hallucinations.” This can be especially problematic for advanced applications involving diagnoses or complicated cases (3). For this reason, medical educators using ChatGPT should be aware of its limitations.

- As a medical educator, always verify the plausibility and accuracy of any information you use from ChatGPT such as cases or vignettes.

- If students are given an assignment using ChatGPT, be sure to follow it with a discussion or debrief to clarify any misconceptions, mistakes, or omissions in information the students receive.

- Recognize that ChatGPT’s communication skills are currently limited. Communication practice should be limited to procedural skills such as practicing the use of a communication framework or taking a history.

- References created by ChatGPT may be partially or entirely fabricated, despite appearing valid. If ChatGPT or another language model generates a reference, search for the original source and follow any DOI links provided to confirm that it exists.

- Suggestions made by ChatGPT, either in healthcare or healthcare education, may not be evidence-based because ChatGPT draws on a broad range of internet text, not just peer-reviewed scientific material (3). Expertise as an educator and clinician must be applied to responses from ChatGPT.

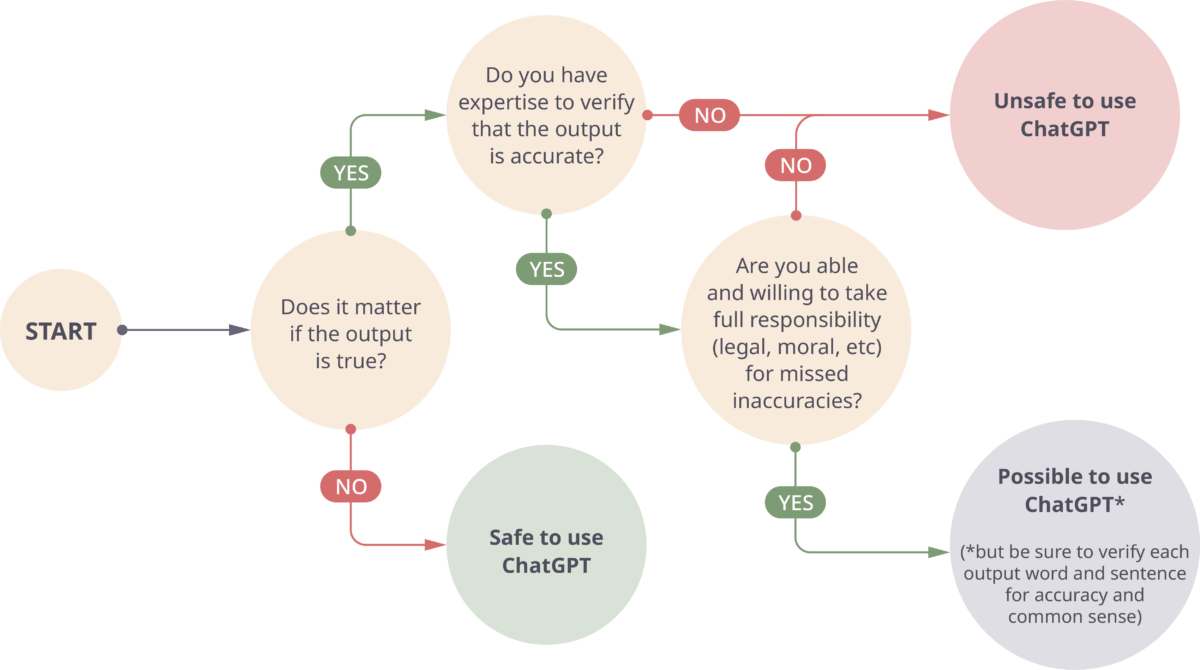

When to Use ChatGPT or Other AI Language Models

Using ChatGPT with Caution (4). A flow chart that can help determine if ChatGPT is the right tool for the task (modified from UNESCO).

Powerful models like ChatGPT stand to revolutionize healthcare and healthcare education in ways we might not have even considered yet, but benefits and cautions must be weighed. The flow chart above can help determine if ChatGPT is the right tool for the task (4).

Prompt Tips

ChatGPT and similar models are rapidly changing; what works once might work differently another time. If you find prompts are not giving the results you expect, try some of the following tips:

- Determine in advance what your objectives are and what type of output you want.

- Be concise. Tell ChatGPT exactly what you want and no more.

- Avoid jargon. Use clear, unambiguous language.

- Provide context and relevant key words.

- Break complicated requests into smaller step-wise tasks.

- Tell ChatGPT to adjust its responses if you don’t get the desired response.

- Test your prompts thoroughly before using them in the classroom.
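
As one way to apply the tip about breaking complicated requests into smaller tasks, the Python sketch below turns a single large request into an ordered list of short prompts to send one at a time. The function name `stepwise_prompts` and the example wording are hypothetical, and the code only prepares text; it does not contact ChatGPT.

```python
def stepwise_prompts(context, tasks):
    """Turn one complicated request into an ordered list of smaller prompts."""
    if not tasks:
        raise ValueError("list at least one task")
    # The first prompt states the context once so later prompts can stay short.
    prompts = [f"Context: {context} I will give you the tasks one at a time."]
    for number, task in enumerate(tasks, start=1):
        prompts.append(f"Task {number} of {len(tasks)}: {task}")
    return prompts


prompts = stepwise_prompts(
    "I am a medical educator writing a case vignette for an adult with difficulty breathing.",
    [
        "Write the complaint and history.",
        "Add vitals.",
        "Add physical examination findings.",
    ],
)
for text in prompts:
    print(text)
```

Sending the prompts in order also makes it easier to see which step produced an unexpected response and to ask ChatGPT to adjust only that step.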